07.06.23

"With great power comes great responsibility."

This aphorism is more relevant today than ever, as we stand on the precipice of a new era in AI technology.

The release of ChatGPT has sparked debate among stakeholders and, in turn, divided public opinion into two camps:

On one side are the enthusiasts. They see generative AI as a game-changer, a breakthrough that promises to accelerate progress in every field imaginable. They advocate rapid adoption and integration into everyday life.

On the other side are the sceptics, who see these advances as a potential threat to humanity. They call for strict regulation and even a temporary halt to further AI development.

Meanwhile, Sam Altman, the CEO of OpenAI, the company behind ChatGPT, is lobbying the US Senate for speedy regulation, while at the same time warning that OpenAI could leave the EU if the new AI rules are too strict.

Media narratives on the issue have been skewed towards scepticism, often highlighting the most sensational cases, such as the allegedly deadly rogue drone programme, which appears to be a hoax.

As these voices multiply, they raise important questions about the governance of AI technology, and the European Union (EU) is at the forefront of answering them.

The EU's regulatory approach to AI began to take shape long before ChatGPT was unveiled. The European Commission's goal was to create a consistent legal framework for the application of AI, harmonise the internal market and stimulate innovation.

A series of expert hearings and studies led to a draft regulation, now known as the AI Act.

The AI Act is currently under intense discussion in the European Commission, the Council of the EU and the European Parliament.

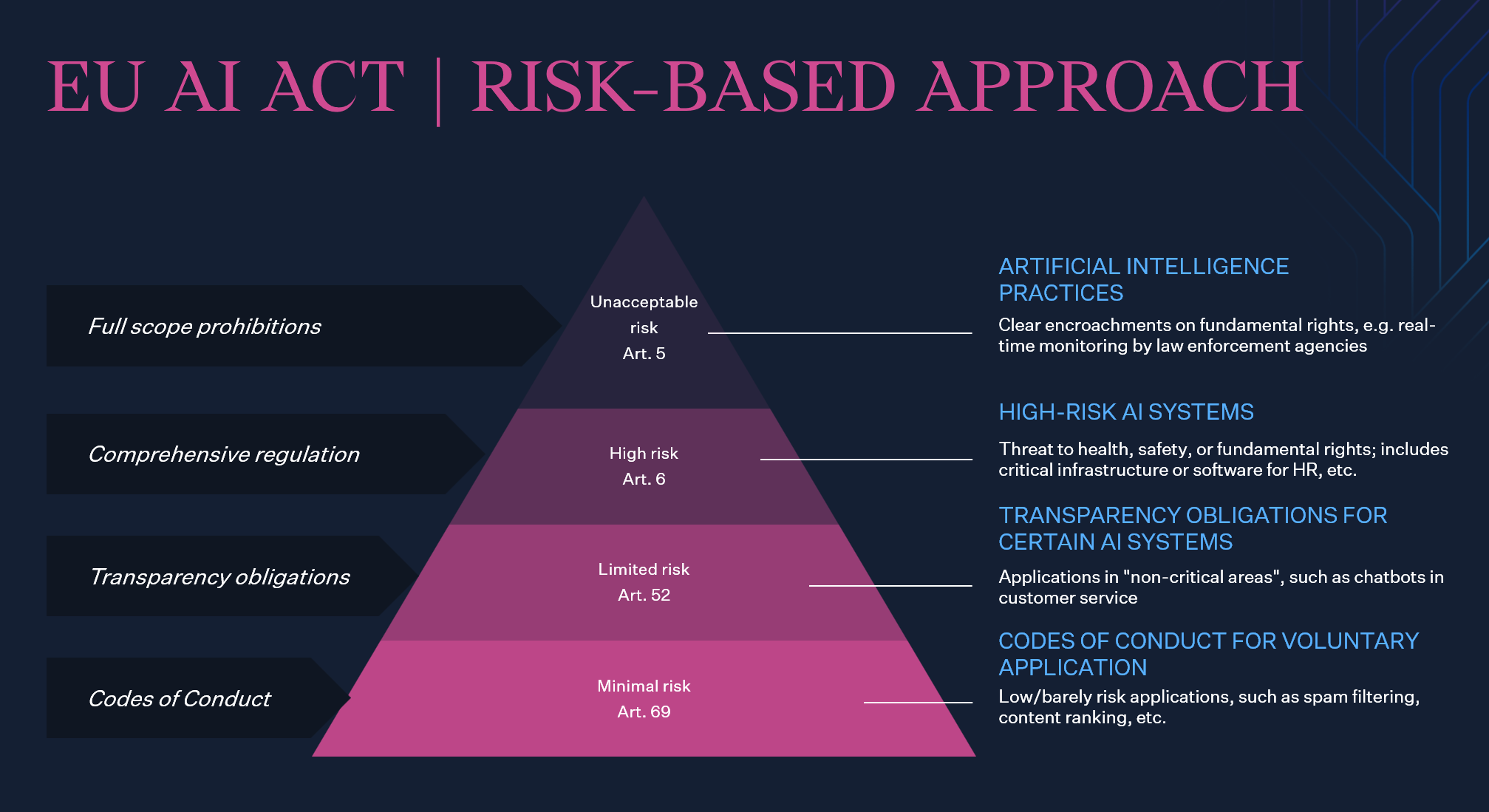

All recent drafts propose risk-based regulation, with AI technologies divided into four categories based on their intended use. Depending on the risk level, developers, traders and users of AI technologies will have to comply with different risk management measures and be transparent about their training data. Certain applications, such as social scoring, are banned outright.

However, some aspects of the Act, particularly those relating to the regulation of foundation models (the backbone of AI systems such as ChatGPT), have come under fire from the startup community. Critics argue that the proposed regulations would make it almost impossible to use ChatGPT and increase the uncertainty surrounding the use of such technologies.

But amid the turmoil, alternatives are emerging that offer viable ways forward. One such example is Aleph Alpha, whose AI models and platform are developed and hosted entirely in Germany. This not only supports strict adherence to Europe's data protection standards, but also underlines a commitment to EU compliance. Aleph Alpha has made significant strides in AI explainability, an essential aspect of AI governance that supports transparency and accountability in the decision-making process of these systems. This emphasis on explainability helps end users understand the how and why behind AI decisions, providing an additional layer of trust in the technology.

In addition to Aleph Alpha, we are also seeing a rise in open-source models that promote the ethos of collaborative development and broad accessibility. These models have the added advantage of being very flexible and adaptable: because the source code is freely available, they can be tweaked and fine-tuned to suit specific use cases, industries or regulatory landscapes. They are also developed by a global community and so benefit from a wide range of perspectives and expertise. This collaborative approach accelerates innovation and helps address and mitigate potential issues early in the development process.

Indeed, these alternative solutions represent an optimistic path that skilfully blends innovation and ethics, while carefully respecting regulatory boundaries. As we collectively increase our knowledge and refine these technologies, they have the potential to serve as the foundation for future AI systems.

However, it's important to recognise that these alternatives are not exempt from the provisions of the forthcoming AI Act, particularly in relation to the training data used for model development. This regulatory framework will require the utmost transparency, a criterion that applies equally to Aleph Alpha and the developers behind open-source models. Navigating these requirements will inevitably be a complex task, but it's a challenge we must take up to ensure the responsible growth of AI in our societies.

As these discussions unfold, one thing is clear: a balanced and meaningful AI governance framework is essential for the EU. This framework must not only mitigate risks, but also foster innovation. After all, the transformative power of AI is undeniable. The challenge is to harness it responsibly and ethically for the benefit of the public.

In the coming posts, we will dive deeper into the complexities, opportunities, and challenges of AI. We will strive to bring clarity amid the cacophony of voices, equipping you with the knowledge and understanding needed to navigate this brave new world. Stay with us on this exciting journey!